
Modern GPUs aren't just about gaming. They're used for video encoding, professional applications, and increasingly they're being used for AI. We've revamped our professional and AI test suite to give a more detailed look at the various GPUs. We'll start with the AI benchmarks on the Arc B570, as those tend to be more important for a wider range of users.

Procyon has several different AI tests, and for now we've run the AI Vision benchmark along with two different Stable Diffusion image generation tests. The tests have several variants available that are all determined to be roughly equivalent by UL: OpenVINO (Intel), TensorRT (Nvidia), and DirectML (for everything, but mostly AMD). There are also options for FP32, FP16, and INT8 data types, which can give different results. We tested the available options and used the best result for each GPU.
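The "best result for each GPU" selection can be sketched roughly as follows. This is a minimal illustration, assuming each run is recorded with its GPU, backend, data type, and a score where higher is better. The `Run` type, the `best_per_gpu` helper, and the sample numbers are hypothetical placeholders, not our test harness or measured results.

```python
from typing import Iterable, NamedTuple


class Run(NamedTuple):
    gpu: str
    backend: str   # e.g. "OpenVINO", "TensorRT", "DirectML"
    dtype: str     # e.g. "FP32", "FP16", "INT8"
    score: float   # assumed: higher is better


def best_per_gpu(runs: Iterable[Run]) -> dict[str, Run]:
    """Keep the highest-scoring run for each GPU."""
    best: dict[str, Run] = {}
    for run in runs:
        current = best.get(run.gpu)
        if current is None or run.score > current.score:
            best[run.gpu] = run
    return best


# Placeholder numbers for illustration only, not benchmark results.
sample = [
    Run("GPU A", "DirectML", "FP16", 100.0),
    Run("GPU A", "OpenVINO", "INT8", 180.0),
    Run("GPU B", "DirectML", "FP16", 120.0),
    Run("GPU B", "TensorRT", "INT8", 150.0),
]
for gpu, run in best_per_gpu(sample).items():
    print(f"{gpu}: {run.score} ({run.backend}, {run.dtype})")
```

Keeping the backend and data type alongside the winning score makes it clear which execution path produced each charted number.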

The Arc B570 performs well in the Stable Diffusion tests: in SDXL, the only faster GPU in our budget-to-midrange collection is the B580. The 4060 does pull ahead in the older and less taxing SD 1.5 test, but the value proposition looks strong on the B570 for these AI workloads. Note that the SDXL test failed to run on the RX 6600 after multiple tries, apparently because it needs more VRAM.

The AI Vision test uses older workloads like ResNet50 to test performance, and again the B570 takes second place behind the B580. How applicable these results are in the real world remains debatable, but for properly optimized software Battlemage looks good.

MLCommons' MLPerf Client 0.5 test suite does AI text generation in response to a variety of inputs. There are four different tests, all using the Llama 2 7B model, and the benchmark measures the time to first token (how fast a response starts appearing) and the tokens per second after the first token. These are combined using a geometric mean for the overall scores, which we report here.
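As a rough sketch of that combination step, the snippet below assumes each of the four tests yields one time-to-first-token value (seconds, lower is better) and one tokens-per-second value (higher is better), and that each metric is combined separately across the tests. The benchmark's exact internal aggregation isn't spelled out here, and the numbers are placeholders rather than measured results.

```python
from statistics import geometric_mean

# Placeholder per-test values for one GPU, not measured results.
ttft_seconds = [0.5, 1.0, 2.0, 4.0]
tokens_per_second = [10.0, 20.0, 20.0, 40.0]

# Assumption: each metric is combined on its own across the four tests.
overall_ttft = geometric_mean(ttft_seconds)
overall_tps = geometric_mean(tokens_per_second)

print(f"time to first token: {overall_ttft:.3f} s")
print(f"tokens per second:   {overall_tps:.1f}")
```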

While AMD, Intel, and Nvidia are all MLCommons partners and were involved with creating and validating the benchmark, it doesn't seem to be quite as vendor agnostic as we would like. AMD and Nvidia GPUs currently have only a DirectML execution path, while Intel has both DirectML and OpenVINO as options. We reported the OpenVINO numbers, which are quite a bit higher than the DirectML results.

The B570 takes the second spot again, after the B580. All of the Arc GPUs have a fast time to first token result, which again seems to stem from the OpenVINO path. For the average tokens per second, Battlemage takes first and second, but AMD's 7600 XT ranks third, ahead of the 4060. The older RX 6600 falls far behind, both in time to first token and average tokens per second.

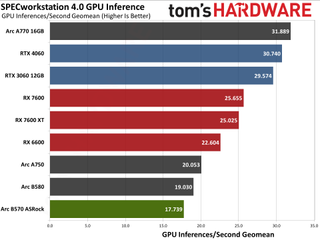

We'll have other SPECworkstation 4.0 results below, but the suite also includes an AI inference test composed of ResNet50 and SuperResolution workloads that runs on GPUs (and potentially NPUs, though we haven't tested that). We calculate the geometric mean of the four results, which are given in inferences per second. That isn't an official SPEC score, but it's more useful for our purposes.
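For reference, the geometric mean of n results is the n-th root of their product. A minimal sketch, assuming four positive inferences-per-second figures; the `geomean` helper and the numbers are hypothetical placeholders, not measured results.

```python
import math


def geomean(values: list[float]) -> float:
    """Return the n-th root of the product of n positive values."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geometric mean needs at least one value, all positive")
    return math.prod(values) ** (1.0 / len(values))


# Placeholder inferences-per-second figures, not measured results.
results = [100.0, 400.0, 50.0, 200.0]
print(round(geomean(results), 1))
```

Because all four inputs share the same unit, the combined figure can still be read as inferences per second.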

Again, software optimizations will likely make or break performance here. The A770 comes out on top, followed by the 4060 and 3060. All three of the AMD GPUs fall in the middle, then the A750 trails the A770 by a larger than normal margin, and the Battlemage GPUs take the two bottom slots.

For our professional application tests, we'll start with Blender Benchmark 4.3.0, which has support for Nvidia OptiX, Intel oneAPI, and AMD HIP libraries. Those aren't necessarily equivalent in terms of the level of optimizations, but each represents the fastest way to run Blender on a particular GPU at present.

The B570 and Battlemage results once more fall behind some GPUs we would normally expect them to beat, like the A750 and A770. Nvidia's 4060 and 3060 place first and second, while AMD's GPUs bring up the rear. As this test should stress the hardware RT units, it could indicate that the reduced number of RT units (relative to Alchemist) is to blame, or it could be a lack of tuning in the current Intel drivers.

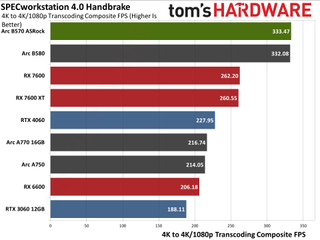

SPECworkstation 4.0 has two other test suites that are of interest in terms of GPU performance. The first is the video transcoding test using HandBrake, a measure of the video engines on the different GPUs and something that can be useful for content creation work. Here we use the average of the 4K to 4K and 4K to 1080p scores.

Here the Arc B570 does very well, matching the B580 and easily surpassing the other GPUs. You can also see the different generations of video codec hardware at play, with the two 7600 cards basically tied while the 6600 is quite a bit slower. Battlemage appears to have some improvements that help relative to Alchemist in this particular workload as well.

Again, it's disappointing that Intel killed off the studio portion of its drivers that allowed users to easily capture video content. OBS hasn't worked for me on the Battlemage cards, perhaps drivers again, or possibly just because it's more complex and I didn't set it up correctly.

Our final professional app tests consist of SPECworkstation 4.0's viewport graphics suite. These are basically the same tests as SPECviewperf 2020, only updated to the latest versions. (Also, Siemens NX isn't part of the suite now.) There are seven individual application tests, and we've combined the scores from each into an unofficial overall score using a geometric mean.
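One reason to prefer a geometric mean for an overall score like this is that a single test with unusually large numbers can't dominate the result. The sketch below uses placeholder scores for two hypothetical GPUs across seven tests: the second GPU is 10 percent faster in six tests but half as fast in the one test with much larger raw scores. None of these numbers are measured results.

```python
from statistics import fmean, geometric_mean

# Placeholder scores for two hypothetical GPUs across seven tests.
gpu_x = [50.0, 60.0, 40.0, 300.0, 55.0, 45.0, 65.0]
gpu_y = [55.0, 66.0, 44.0, 150.0, 60.5, 49.5, 71.5]


def ratio(mean, a, b):
    """Overall score of a divided by overall score of b, for a given mean."""
    return mean(a) / mean(b)


print("arithmetic:", round(ratio(fmean, gpu_x, gpu_y), 3))
print("geometric: ", round(ratio(geometric_mean, gpu_x, gpu_y), 3))
```

With the arithmetic mean, the one high-magnitude test puts the first GPU well ahead overall; with the geometric mean, the two land close together, which better reflects the per-test picture.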

The Arc B570 lands at the bottom of the overall chart, and does somewhat poorly relative to the other GPUs in several of the individual tests. 3dsmax, Creo, and Maya have the B570 in last place, often quite a bit behind the B580. AMD meanwhile offers a strong point for its GPUs in this set of tests, so if you use any of these professional applications, check the individual results.



Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


Gururu If this becomes more available than the b580, I would happily put this into my little brother or sister's new build. $200-250 is absolutely budget and I guess the performance is better than integrated solutions.

GenericUser2001 Any thoughts on doing a performance test of this and the B580 using a more budget processor? Quite a few other websites have been retesting the B580 and found that it has some sort of driver overhead issues, and when paired with more modest CPU like a Ryzen 5600 the B580 often ends up falling behind a Radeon 7600 or Geforce 4060 on the same games it leads in when paired with a high end CPU.

Elusive Ruse Thanks for the review Jarred, I like that you don’t skip higher resolutions and RT which might not be as relevant for a budget GPU but in my opinion they offer good insight on overall improvement gen-on-gen.

The price point is pretty good and I think many buyers would rather buy a new release with potential to get higher performance in the future with better drivers than buying a used card or an older generation card for the same money and performance.

das_stig am I misinterpreting the chart or why buy a B5x0 when the A7x0 is superior in most things including price, except for extra wattage and boost clock?

Notton

das_stig said: am I misinterpreting the chart or why buy a B5x0 when the A7x0 is superior in most things including price, except for extra wattage and boost clock?

If you're looking at the same charts I am looking at, yes.

B570 > A750, B580 > A770 at a majority of games.

There are some exceptions where this flips around on some settings, like TLoU 1080p ultra, but reverts to B570 being dominant at 1080p medium.

eye4bear Day before yesterday I managed to order and pick-up after work one of only 3 B580s at the Miami Micro Center, and the other two were gone yesterday on their web-site. Worked late last evening, so haven't had a chance yet to install it. Replacing an Arc A380. If I find out anything interesting, will let you all know.

JarredWaltonGPU

GenericUser2001 said: Any thoughts on doing a performance test of this and the B580 using a more budget processor? Quite a few other websites have been retesting the B580 and found that it has some sort of driver overhead issues, and when paired with more modest CPU like a Ryzen 5600 the B580 often ends up falling behind a Radeon 7600 or Geforce 4060 on the same games it leads in when paired with a high end CPU.

It all takes time, the one thing I definitely don't have right now. There's a reason RTX 3050 isn't in the charts either. LOL. But eventually, it's something I'd like to investigate... and will probably be stale before I could get around to it. Because it's time to start testing the extreme GPUs in preparation for RTX 5090 and 5080. And after that? The high-end cards in preparation for RTX 5070 Ti and 5070, plus RX 9070 XT and 9070.

I should have more ability to do off the beaten path testing in about two months, in other words. <sigh> But it's good to be busy, even if we don't have enough time between getting cards and the launch dates.

-Fran- Thanks for the comprehensive data as always, Jarred.

And kind of sad the conclusion from most people reviewing it is: "well, the B580 is the better pick if you can find it at MSRP". I wonder if Intel can make this card hit a lower price point? I mean, without actually losing money. Sounds tricky to do.

And I'm surprised OBS didn't work for you. I would have imagined they'd be exposing the capabilities of Battlemage the same way as Alchemist for the encoders. Well, I hope a patch is coming, since that's a big miss for me at least :(

Regards.

rluker5 I've got a B580 and noticed a couple of bugs in overclocking.

1. my PC doesn't like to wake from sleep with an overclock applied to the B580. It will wake, not be happy and restart which turns off the oc. No problem if no oc. I am running a pretty heavy undervolt on my 13900kf and it is stable in everything else, but maybe is giving this particular boot issue. Also not a fresh OS install.

2. The ram oc usually doesn't take 21 Gbs right away. I have to do 20, sometimes 20.1 then it takes 21 and the change shows up in GPUZ and everything else.

I just thought of the ram oc finickyness reading this article and how I would want to oc vram if I had a B570. Hopefully few others have these issues but I'm seeing them so I brought them up.

Also my B580 has been a bunch faster than my A750 in the few games I've played on it.